Fixing a Slow Site Iteratively

Site performance is potentially the most important metric. The better the performance, the better the chance that users stay on a page, read content, make purchases, or do just about whatever they need to do. A 2017 study by Akamai says as much. It found that even a 100ms delay in page load can decrease conversions by 7%. It also found that sites can lose 1% of their sales for every 100ms it takes them to load, which, at the time of the study, was equivalent to $1.6 billion if the site slowed down by just one second. Google's industry benchmarks from 2018 also provide a striking breakdown of how each second of loading affects bounce rates.

On the flip side, Firefox made their webpages load 2.2 seconds faster on average, and that drove 60 million more Firefox downloads per year. Speed is also something Google considers when ranking your website placement on mobile. Having a slow site might leave you on page 452 of search results, regardless of any other metric.

With all of this in mind, I thought improving the speed of my own version of a slow site would be a fun exercise. The code for the site is available on GitHub for reference.

This is a very basic site made with simple HTML, CSS, and JavaScript. I've intentionally tried to keep it as simple as possible. That means the reason it is slow has nothing to do with the complexity of the site itself or with some framework it uses. The most complex part is a set of social media buttons for people to share the page.

Here's the thing: performance is more than a one-off task. It's inherently tied to everything we build and develop. So, while it's tempting to solve everything in one fell swoop, the best approach to improving performance might be an iterative one. Determine if there's any low-hanging fruit, and figure out which efforts might be bigger or longer-term. In other words, incremental improvements are a great way to score performance wins. Again, every millisecond counts.

In that spirit, what we're looking at in this article is focused more on the incremental wins and less on providing an exhaustive list or checklist of performance strategies.

Lighthouse

We're going to be working with Lighthouse. Many of you may already be super familiar with it. It's even been covered a bunch right here on CSS-Tricks. It's a Google tool that audits things like performance, accessibility, SEO, and best practices. I'm going to audit the performance of my slow site before and after the things we tackle in this article. The Lighthouse reports can be accessed directly in Chrome's DevTools.

Go ahead and briefly look at the things that Lighthouse says are wrong with the website. It's good to know what needs to be solved before diving right in.

We can totally fix this, so let's get started!

Improvement #1: Redirects

Before we do anything else, let's see what happens when we first hit the website. It gets redirected. The site used to be at one URL and now it lives at another. That means any link that references the old URL is going to redirect to the new URL.

Redirects usually add relatively little latency to a website. Still, they are an easy first thing to check, and they can generally be removed with little effort.

We can try to remove them by updating every place that uses the previous URL of the site so it points to the updated URL. That way, users are taken there directly instead of being redirected. Using a network request inspector, I'm going to see if there's anything we can remove via the Network panel in DevTools. We could also use a tool like Postman if we need to, but we'll limit our work to DevTools as much as possible for the sake of simplicity.

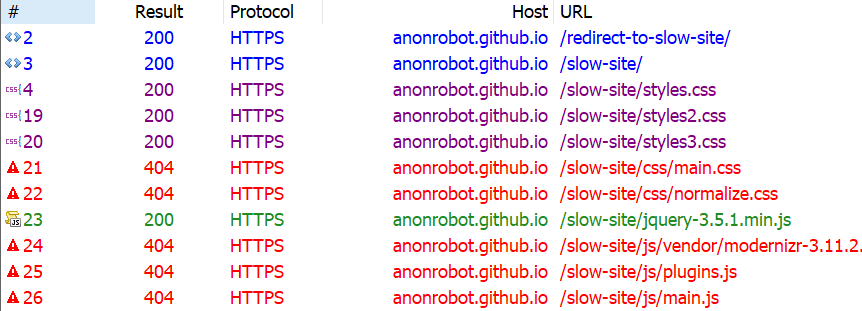

First, let's see if there are any HTTP or HTML redirects. I like using Fiddler, and when I inspect the network requests I see that there are indeed some old URLs and redirects floating around.

I recently renamed my GitHub username from anonrobot to kealanparr, so everything is the same except the domain name.

It looks like the first request we hit is https://anonrobot.github.io/redirect-to-slow-site/ before it HTML redirects to https://anonrobot.github.io/slow-site/. We can repoint all our redirect-to-slow-site URLs to the updated URL. In DevTools, the Network inspector helps us see what the first webpage is doing as well. From my view in Fiddler it looks like this:

This tells us that the site is using an HTML redirect to the next site. I'm going to update my referenced URL to point to the new site. That removes latency that adds drag to the initial page load.
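If there are many old links to check, a small script can flag pages that still rely on an HTML redirect (a <meta http-equiv="refresh"> tag) and report where they point. findMetaRefreshTarget is a hypothetical helper that uses regular expressions rather than a real HTML parser. The sample markup is an assumed example of what a redirect page looks like, not the actual file from the repository.

```javascript
// Hypothetical helper: find the target of an HTML (meta refresh) redirect in a page's markup.
// A regex sketch, not a real HTML parser; it expects the content attribute to be quoted.
function findMetaRefreshTarget(html) {
  // Match a <meta ... http-equiv="refresh" ...> tag, with its attributes in any order.
  const tag = html.match(/<meta[^>]*http-equiv=["']?refresh["']?[^>]*>/i);
  if (!tag) return null;

  // The content attribute usually looks like "0; url=https://example.com/new-page/"
  const content = tag[0].match(/content=["']([^"']*)["']/i);
  if (!content) return null;

  const url = content[1].match(/url\s*=\s*(.+)$/i);
  return url ? url[1].trim() : null;
}

// Hypothetical markup for the old redirect page.
const redirectPage = `<html><head>
  <meta http-equiv="refresh" content="0; url=https://anonrobot.github.io/slow-site/">
</head></html>`;

console.log(findMetaRefreshTarget(redirectPage)); // https://anonrobot.github.io/slow-site/
console.log(findMetaRefreshTarget("<html><head></head></html>")); // null
```

Running it over each page that still links to the old address shows which URL to put in its place. HTTP redirects never appear in the markup at all. They show up as 3xx responses in the Network panel instead.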

Improvement #2: The Critical Render Path

Next, I'm going to profile the site with the Performance panel in DevTools. I'm most interested in unblocking the site so it can render content as fast as possible. Rendering is the process of turning HTML, CSS and JavaScript into a fully fleshed-out, interactive website.

It begins with retrieving the HTML from the server and converting it into the Document Object Model (DOM). As we parse the HTML line by line, we run any inline JavaScript as we see it, or download it if it's an external asset. We also build the CSS into the CSS Object Model (CSSOM). The CSSOM and the DOM combine to make the render tree. From there, we run layout, which places everything in the correct place on the screen, before finally running paint.

This process can be "blocked" if it has to wait for resources to load before it runs. This sequence of steps is what we call the Critical Render Path, and the resources that block it are critical resources.

The most common critical resources are:

- A <script> tag that is in the <head>, doesn't have an async or defer attribute, and isn't a module script (type="module").
- A <link rel="stylesheet"> that doesn't have the disabled attribute (which tells the browser not to download the CSS) and that either has no media attribute or has one that matches the user's device.

There are a few more types of resources that might block the Critical Render Path, like fonts, but the two above are by far the most common. These resources block rendering because the browser thinks the page is "unfinished" and has no idea what resources it needs or has. For all the browser knows, the site could download something that expects the browser to do even more work, like styling or color changes. The page is therefore incomplete from the browser's point of view, so it assumes the worst and blocks rendering.

An example CSS file that wouldn't block rendering would be:

```html
<link href="printing.css" rel="stylesheet" media="print">
```

The media="print" attribute means the stylesheet only applies when the user prints the webpage (because maybe you want to style things differently in print). The browser still downloads it, but at a low priority and without holding up rendering, so the file isn't blocking anything from appearing on screen.
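The two rules for critical resources can be sketched as a small classifier. A script in the <head> blocks rendering unless it is async, deferred, or a module. A stylesheet blocks rendering unless it is disabled or its media query doesn't match. isRenderBlocking is a hypothetical helper, and mediaMatches is an assumed stand-in for window.matchMedia(query).matches so the sketch can run outside a browser.

```javascript
// Hypothetical classifier for resources found in the <head>.
// `mediaMatches` stands in for window.matchMedia(query).matches so this runs outside a browser.
function isRenderBlocking(resource, mediaMatches) {
  const { tag, attrs = {} } = resource;

  if (tag === "script") {
    // Module scripts are deferred by default.
    if (attrs.type === "module") return false;
    // async and defer only take effect on external classic scripts (ones with a src).
    const hasSrc = "src" in attrs;
    return !(hasSrc && ("async" in attrs || "defer" in attrs));
  }

  if (tag === "link" && attrs.rel === "stylesheet") {
    if ("disabled" in attrs) return false;
    // A missing media attribute means "all", which always matches.
    return mediaMatches(attrs.media ?? "all");
  }

  // Fonts and other resource types are out of scope for this sketch.
  return false;
}

// Pretend we're rendering to a screen, so only "all" and "screen" match.
const onScreen = (media) => media === "all" || media === "screen";

const headResources = [
  { tag: "script", attrs: { src: "social.js" } },
  { tag: "script", attrs: { src: "app.js", defer: "" } },
  { tag: "script", attrs: { src: "widget.js", type: "module" } },
  { tag: "link", attrs: { rel: "stylesheet", href: "styles.css" } },
  { tag: "link", attrs: { rel: "stylesheet", href: "printing.css", media: "print" } },
];

for (const r of headResources) {
  console.log(r.attrs.src ?? r.attrs.href, isRenderBlocking(r, onScreen));
}
// social.js true
// app.js false
// widget.js false
// styles.css true
// printing.css false
```

The file names above are hypothetical. Note that an inline script with defer still blocks, because defer has no effect on classic scripts without a src.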

As Chris likes to say, a front-end developer is aware. Being aware of what a page needs to download before rendering begins is vitally important for improving performance audit scores.

Improvement #3: Unblock parsing

Unblocking the render path is one thing we can immediately speed up, but we can also block parsing if we aren't careful with our JavaScript. Parsing is what makes HTML elements part of the DOM, and whenever we encounter JavaScript that needs to run right away, HTML parsing is blocked until it finishes.

Some of the JavaScript in my slow webpage doesn't need to block parsing. In other words, we can download the scripts asynchronously and continue parsing the HTML into the DOM without delay.

The async attribute lets the browser download a JavaScript asset in parallel with parsing and run it as soon as it arrives. The defer attribute also downloads the script in parallel, but only runs it once the HTML has been fully parsed, in document order.
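The difference between the two attributes is easiest to see on a timeline. This is a simplified model, not how a browser works internally. It assumes every download starts at t = 0 and that scripts take no time to run, and it leaves ordinary parser-blocking scripts out entirely. scriptRunTimes and the script names and timings are hypothetical.

```javascript
// Simplified timeline model (assumptions: all downloads start at t = 0, scripts take 0ms to run,
// and ordinary parser-blocking scripts are left out). Not how a browser is implemented.
function scriptRunTimes(scripts, parseEndMs) {
  const runs = [];
  let lastDeferRun = parseEndMs;

  for (const s of scripts) {
    if (s.mode === "async") {
      // async: runs as soon as its own download finishes, ignoring document order
      runs.push({ name: s.name, runAt: s.readyAt });
    } else if (s.mode === "defer") {
      // defer: waits for parsing to finish and for earlier defer scripts (document order)
      lastDeferRun = Math.max(lastDeferRun, s.readyAt);
      runs.push({ name: s.name, runAt: lastDeferRun });
    } else {
      throw new Error(`mode not modelled in this sketch: ${s.mode}`);
    }
  }

  // Array.prototype.sort is stable, so scripts that run at the same time keep document order.
  return runs.sort((a, b) => a.runAt - b.runAt);
}

// Hypothetical scripts, listed in document order; readyAt = when each download finishes (ms).
const scripts = [
  { name: "social.js", mode: "async", readyAt: 300 },
  { name: "vendor.js", mode: "defer", readyAt: 250 },
  { name: "app.js", mode: "defer", readyAt: 50 },
  { name: "analytics.js", mode: "async", readyAt: 100 },
];

const order = scriptRunTimes(scripts, 200); // HTML parsing finishes at 200ms
console.log(order.map((r) => `${r.name}@${r.runAt}`).join(", "));
// analytics.js@100, vendor.js@250, app.js@250, social.js@300
```

Note how app.js finishes downloading by 50ms but still runs after vendor.js. That is because defer preserves document order, while async scripts run in whatever order their downloads complete.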

There's a trade-off here between inlining JavaScript (so running it doesn't require a network request) and placing it in its own JavaScript file (for modularity and code reuse). Feel free to make your own judgment call here, as the best route depends on the use case. Once it has arrived, the actual performance of applying CSS and JavaScript to a webpage is the same whether it's an external asset or inlined. The only thing we remove by inlining is the network request time to fetch the external assets (which sometimes makes a big difference).

The main thing we're aiming for is to do as little as we can. We want to defer loading assets and, at the same time, make those assets as small as possible. All of this will translate into better performance.

My slow site is chaining multiple critical requests. The browser has to read the next line of HTML, wait, then read the next one to check for another asset, then wait again. The size of the assets, when they get downloaded, and whether they block are all going to play hugely into how fast our webpage can load.

I approached this by profiling the site in the DevTools Performance panel, which simply records how the site loads over time. I briefly scanned my HTML and what it was downloading. Then I added async to any external JavaScript that was blocking things, like the social media <script>, which doesn't need to load before rendering.

It's interesting that Chrome can only keep six HTTP/1.1 connections in flight per domain name. Once those six are busy, it waits for an asset to return before requesting another. (HTTP/2 sidesteps this by multiplexing many requests over a single connection.) That makes requesting multiple critical assets even worse for HTML parsing. Allowing the browser to continue parsing shortens the time it takes to show something to the user and improves our performance audit.
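A rough queueing model shows why a per-host limit hurts once a page requests more than six assets. totalLoadTime is a hypothetical helper. It assumes HTTP/1.1 (where the limit applies), that every request's duration is known up front, and that requests don't slow each other down by sharing bandwidth.

```javascript
// Rough model of a per-host connection limit. Assumptions: HTTP/1.1, each request's duration is
// known up front, requests are issued in order, and they don't slow each other down.
function totalLoadTime(durationsMs, maxConnections = 6) {
  // freeAt[i] = time (ms) when connection i can start its next request
  const freeAt = new Array(maxConnections).fill(0);
  let finish = 0;

  for (const duration of durationsMs) {
    // Queue the request on whichever connection frees up first.
    let next = 0;
    for (let i = 1; i < freeAt.length; i++) {
      if (freeAt[i] < freeAt[next]) next = i;
    }
    freeAt[next] += duration;
    finish = Math.max(finish, freeAt[next]);
  }
  return finish;
}

const twelveRequests = new Array(12).fill(100); // hypothetical: twelve 100ms requests, same host
console.log(totalLoadTime(twelveRequests));     // 200 (two "waves" of six)
console.log(totalLoadTime(twelveRequests, 12)); // 100 (if all twelve could be in flight at once)
```

Even a seventh request costs a whole extra wave in this model. That is one reason to trim and consolidate critical requests.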

Improvement #4: Reduce the payload size

The total size of a site is a huge factor in how fast it will load. According to web.dev, sites should aim to stay below 1,600 KB in order to become interactive in under 10 seconds. Large payloads are strongly correlated with long load times. You can even consider a large payload an expense to the end user, since large downloads may require larger data plans that cost more money.

At this exact point in time, my slow site is a whopping 9,701 KB, more than six times the ideal size. Let's trim that down.
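A tiny budget check makes that comparison repeatable as the site changes. checkBudget is a hypothetical helper. In practice the sizes would come from the Network panel or a build tool, and the single entry below is simply the 9,701 KB total reported for the slow site.

```javascript
// Hypothetical page-weight budget check against the 1,600 KB target from web.dev.
function checkBudget(assets, budgetKB = 1600) {
  const totalKB = assets.reduce((sum, asset) => sum + asset.sizeKB, 0);
  return {
    totalKB,
    overBudget: totalKB > budgetKB,
    timesBudget: totalKB / budgetKB,
  };
}

// The slow site's total weight, treated as a single entry.
const report = checkBudget([{ name: "slow site (all assets)", sizeKB: 9701 }]);
console.log(report.totalKB, report.overBudget, report.timesBudget.toFixed(2)); // 9701 true 6.06
```

9,701 / 1,600 ≈ 6.06, which confirms the "more than six times" figure.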

Identifying unused dependencies

At the beginning of my development, I thought I might need certain assets or frameworks. I downloaded them onto my page and now can't even remember which ones are actually being used. I definitely have some assets that are doing nothing but wasting time and space.

Using the Network inspector in DevTools (or a tool you feel comfortable with), we can see some things that can definitely be removed from the site without changing its underlying behavior. I found a lot of value in the Coverage panel in DevTools, because it shows just how much code is actually used after everything has downloaded.

As we've already discussed, there is always a fine balance between inlining CSS and JavaScript and using an external asset. But, at this very moment, the site certainly appears to be downloading far more than it actually needs.

Another quick way to trim things down is to check whether any of the assets the site is trying to load return a 404. Those requests can definitely be removed without any negative impact on the site, since they aren't loading anyway. Here's what Fiddler shows me:

Looking again at the Coverage report, we know some downloaded assets contain a significant amount of unused code that still makes its way to the page. In other words, these assets are doing something, but they're also ready to do things we don't need them to do. That includes React, jQuery and Vue, so those can be removed from my slow site with no real impact.
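To turn a coverage run into numbers, a small script can compute how much of each file goes unused. This sketch assumes entries shaped like the output of Puppeteer's page.coverage.stopJSCoverage(): { url, text, ranges }, where ranges lists the used, non-overlapping character offsets into text. A DevTools Coverage export may not match this shape exactly. unusedReport is a hypothetical helper, and the URLs and contents are made up.

```javascript
// Summarise a coverage run: what share of each downloaded file was never used?
// Assumed entry shape (as in Puppeteer's coverage API): { url, text, ranges: [{ start, end }] },
// with `ranges` listing the *used*, non-overlapping character offsets into `text`.
function unusedReport(entries) {
  return entries
    .map(({ url, text, ranges }) => {
      const used = ranges.reduce((sum, r) => sum + (r.end - r.start), 0);
      const total = text.length;
      const unusedPct = total === 0 ? 0 : (100 * (total - used)) / total;
      return { url, total, used, unusedPct };
    })
    .sort((a, b) => b.unusedPct - a.unusedPct); // worst offenders first
}

// Hypothetical data: a mostly used app script and a mostly unused library.
const entries = [
  {
    url: "https://example.com/app.js",
    text: "x".repeat(50),
    ranges: [{ start: 0, end: 25 }, { start: 30, end: 50 }],
  },
  {
    url: "https://example.com/library.js",
    text: "x".repeat(100),
    ranges: [{ start: 0, end: 10 }],
  },
];

for (const r of unusedReport(entries)) {
  console.log(`${r.url}: ${r.unusedPct.toFixed(1)}% unused`);
}
// https://example.com/library.js: 90.0% unused
// https://example.com/app.js: 10.0% unused
```

A file near the top of this list that the site barely uses is a good candidate to drop entirely, as with the unused libraries above.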

Why so many JavaScript libraries? Well, there are real-life scenarios where we reach for something because it meets our requirements, but then those requirements change and we need to reach for something else. Again, we've got to be aware as front-end developers. Continually keeping an eye on which resources are relevant to the site is part of that overall awareness.

Compressing, minifying and caching assets

Just because we need to serve an asset doesn't mean we have to serve it at its full size, or even re-serve it the next time the user visits the site. We can compress our assets, minify our styles and scripts, and cache things responsibly, so we serve what the user needs in the most efficient way possible.

- Compressing means we optimize a file, such as an image, to its smallest size without impacting its visual quality. For example, gzip is a common compression algorithm that makes assets smaller.

- Minification reduces the size of text-based assets, like external script files, by removing cruft such as comments and whitespace from the code, so fewer bytes are sent over the wire.

- Caching allows us to store an asset in the browser's cache for a period of time so that it is immediately available to users on subsequent page loads. So, load it once, enjoy it many times.

Let's look at three different types of assets and how to crunch them with these tactics.

Text-based assets

These include text files, like HTML, CSS and JavaScript. We want to do everything in our power to make these as lightweight as possible, so we compress, minify, and cache them where possible.

At a very high level, gzip works by finding common, repeated parts in the content, storing each sequence once, and removing the repeats from the source text. It keeps a dictionary-like look-up so it can quickly reference the saved pieces and put them back where they belong when the file is decompressed, a process known as gunzipping. Check out this gzipped example of a file containing poetry.

We're doing this to make any text-based downloads as small as we can. We are already using gzip. I checked with this tool by GIDNetwork, and it shows that the slow site's content is 59.9% compressed. That probably means there are more opportunities to make things even smaller.
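To see the idea in action, Node's built-in zlib module can gzip a string and report the savings, which is roughly the kind of figure an online checker gives. gzipSavings is a hypothetical helper. The stylesheet text is a made-up, deliberately repetitive example, so expect a much higher percentage than a real site would get.

```javascript
const zlib = require("zlib");

// Report how much gzip shrinks a piece of text.
function gzipSavings(text) {
  const original = Buffer.byteLength(text, "utf8");
  const compressed = zlib.gzipSync(text).length;
  return { original, compressed, savedPct: 100 * (1 - compressed / original) };
}

// Hypothetical, deliberately repetitive CSS: repeated sequences are exactly what gzip exploits.
const css = ".button { color: #333; padding: 4px 8px; }\n".repeat(200);
const result = gzipSavings(css);
console.log(`${result.original} bytes -> ${result.compressed} bytes (${result.savedPct.toFixed(1)}% smaller)`);

// Gunzipping restores the original text exactly: gzip is lossless.
console.log(zlib.gunzipSync(zlib.gzipSync(css)).toString("utf8") === css); // true
```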

I decided to consolidate the multiple CSS files into one single file called styles.css. This way, we limit the number of network requests. Besides, when we crack open the three files, each one contains such a tiny amount of CSS that three network requests simply aren't justified.

Doing this also gave me the opportunity to remove unnecessary CSS selectors that weren't being applied anywhere in the DOM, again reducing the number of bytes sent to the user.
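Consolidating the files and stripping out comments and whitespace can be sketched in a few lines. bundleAndMinify is a hypothetical, deliberately naive helper that works on strings with regular expressions. A real minifier such as cssnano parses the CSS properly, and this version could mangle strings or url() values that contain the characters it touches. It does not remove unused selectors either; that still takes a review like the one above. The three input files are made up.

```javascript
// Deliberately naive sketch: join stylesheets into one and strip comments and extra whitespace.
// Spaces around ":" are left alone, because removing them in a selector ("a :hover" vs "a:hover")
// would change its meaning.
function bundleAndMinify(stylesheets) {
  return stylesheets
    .join("\n")
    .replace(/\/\*[\s\S]*?\*\//g, "") // remove /* comments */
    .replace(/\s+/g, " ")             // collapse runs of whitespace to a single space
    .replace(/\s*([{};,])\s*/g, "$1") // drop spaces around braces, semicolons and commas
    .replace(/;}/g, "}")              // the last semicolon in a block is optional
    .trim();
}

// Hypothetical contents of three small stylesheets.
const files = [
  "/* base.css */\nbody {\n  margin: 0;\n  font-family: sans-serif;\n}\n",
  "h1, h2 {\n  color: #222;\n}\n",
  ".share-button { padding: 4px 8px; }\n",
];

console.log(bundleAndMinify(files));
// body{margin: 0;font-family: sans-serif}h1,h2{color: #222}.share-button{padding: 4px 8px}
```

The output could be written to a single styles.css, so the page makes one request instead of three.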

Ilya Grigorik wrote an excellent article with strategies for compressing text-based assets.

Images

We can also optimize the images on the slow site. As reports consistently show, images are the most common asset request. In fact, over the period from 2016 to 2021, the median data transfer for images was 948.1 KB on desktop and 902 KB on mobile devices. That's already more than half of the ideal 1,600 KB size for an entire page load.

My slow site doesn't serve that many images, but the images it does serve can be smaller. I ran them through an online tool called Squoosh and achieved a 40% saving (18.6 KB down to 11.2 KB). That's a win! Of course, you can also do this before uploading with a desktop application like ImageOptim, or even as part of your build process.
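As a quick check on the Squoosh numbers, the saving is the size reduction divided by the original size:

$$
\text{savings} = \frac{18.6\,\text{KB} - 11.2\,\text{KB}}{18.6\,\text{KB}} = \frac{7.4}{18.6} \approx 0.398 \approx 40\%
$$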

I couldn't see any visual differences between the original images and the optimized versions (which is great!). I was even able to reduce the size further by resizing the actual file, reducing the image quality, and changing the color palette. But those are things I did in image editing software. Ideally, that's something you or a designer would do when initially creating the assets.

Caching

We've touched on minification and compression and how to use them to our advantage. The final thing we can check is caching.

I have been requesting the slow site over and over and, so far, it always looks like it's requested fresh every time, without any caching at all. I looked through the HTML and saw that caching was disabled here:

```html
<meta http-equiv="Cache-Control" content="no-cache, no-store, must-revalidate">
```

I removed that line, so browser caching should now be able to take place, helping serve the content even faster.
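Browser caching is ultimately controlled by HTTP response headers. The HTML standard doesn't define a Cache-Control pragma for <meta http-equiv>, and browsers generally ignore one, so it's worth checking the headers your server actually sends. As a sketch, assuming you control those headers (for example from a small Node server), a hypothetical cacheControlFor helper could pick a policy per file. The file names and max-age values are assumptions, not settings from the original site.

```javascript
const path = require("path");

// Hypothetical caching policy; the file-naming scheme and max-age values are assumptions.
function cacheControlFor(filePath) {
  // HTML: browsers may store it but must revalidate before reuse, so new deploys show up.
  if (path.extname(filePath) === ".html") return "no-cache";

  // Fingerprinted assets (e.g. styles.3f9a1c.css) get a new name whenever they change,
  // so they can safely be cached for a year without revalidation.
  if (/\.[0-9a-f]{6,}\.[a-z0-9]+$/i.test(path.basename(filePath))) {
    return "public, max-age=31536000, immutable";
  }

  // Everything else: reuse for an hour, then revalidate.
  return "public, max-age=3600";
}

for (const file of ["index.html", "styles.3f9a1c.css", "styles.css"]) {
  console.log(file, "->", cacheControlFor(file));
}
```

In a Node server, the value would be sent with res.setHeader("Cache-Control", cacheControlFor(filePath)) when serving each file.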

Improvement #5: Use a CDN

Another big improvement we can make on any website is serving as much as we can from a Content Delivery Network (CDN). David Attard has a super thorough piece on how to add and leverage a CDN. The traditional path of delivering content is to hit the server, request data, and wait for it to return. But if the user is requesting data from the other side of the world from where your data is served, well, that adds time. Making the bytes travel farther in the response from the server can add up to large losses of speed, even if everything else is lightning quick.
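A back-of-the-envelope calculation shows why distance matters. This sketch assumes light in optical fiber covers roughly 200 km per millisecond (about two thirds of its speed in a vacuum). It ignores routing detours and queueing, so it gives a lower bound. The distances and the number of round trips are hypothetical.

```javascript
// Lower bound on round-trip time from distance alone.
// Assumption: light in optical fiber travels roughly 200 km per millisecond.
const FIBER_KM_PER_MS = 200;

function minRoundTripMs(distanceKm) {
  return (2 * distanceKm) / FIBER_KM_PER_MS;
}

// Hypothetical: TCP handshake + TLS 1.3 handshake + the HTTP request itself.
const roundTrips = 3;

const origin = minRoundTripMs(10000); // far-away origin server: 100 ms per round trip
const edge = minRoundTripMs(500);     // nearby CDN edge: 5 ms per round trip
console.log(`origin: ${origin * roundTrips} ms, edge: ${edge * roundTrips} ms`);
// origin: 300 ms, edge: 15 ms
```

Real round trips are slower than this bound. Even so, the gap grows with every round trip a page needs, which is what a nearby CDN edge cuts down.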

A CDN is a set of servers distributed around the world. Because it has multiple locations to choose from, it can intelligently deliver content from a server closer to the user.

We discussed earlier how I was making the user download jQuery even though the site doesn't actually use the downloaded code, and we removed it. If I did actually need jQuery, one easy fix would be to request the asset from a CDN. Why?

- A user may have already downloaded the asset while visiting another site, so it can be served from the cache instead of the network. 75.49% of the top one million sites still use jQuery, after all. (By 2020, browsers such as Safari and Chrome had started doing "cache partitioning," meaning cached assets are no longer shared between different sites, so this potential benefit is gone. The file will still be cached per website.)
- It doesn't have to travel as far to reach the user requesting the data.

We can do something as simple as grabbing jQuery from Google's CDN, which Google makes available for anyone to reference in their own sites:

```html
<head>
  <script src="https://ajax.googleapis.com/ajax/libs/jquery/3.5.1/jquery.min.js"></script>
</head>
```

That should serve jQuery faster than a standard request from my server, especially for visitors who are far away from it.

Are things better?

If you've been implementing along with me so far, or just reading, it's time to re-profile and see whether what we've done has made any improvements.

Recall where we started:

After our changes:

I hope this has been helpful and encourages you to search for incremental performance wins on your own site. By requesting assets optimally, deferring some assets from loading, and reducing the overall size of the site, we can get a functional, fully interactive site in front of the user as fast as possible.

Want to keep the conversation going? I share my writing on Twitter if you want to see more or connect.

Source: https://css-tricks.com/fixing-a-slow-site-iteratively/
